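```python
import numpy as np
data = np.random.uniform(0, 1, 10_000)  # assumed population: uniform on [0, 1] (size is illustrative)
sample = np.random.choice(data, 100)    # draw 100 points, with replacement
sample.mean()                           # e.g. 0.5453087847471367 (varies from run to run)
```

Caleb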

September 12, 2022

Have you ever heard the claim that if you draw many samples from a distribution, the samples will be normally distributed, and that this is guaranteed by the magical Central Limit Theorem?

Think for a moment:

Could the Central Limit Theorem just mean that the samples will be normally distributed if the population is normally distributed too? But isn't that too trivial to be called a theorem?

It turns out that it is the means of the samples that are (approximately) normally distributed, not the samples themselves.

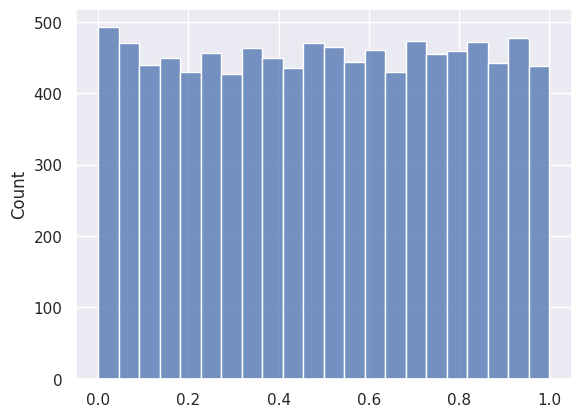

Suppose we are given a dataset consisting of numbers uniformly distributed between 0 and 1.

We can randomly sample 100 data points from the population and calculate the sample mean.

The sample mean is a random variable because of the random sampling process.
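A minimal sketch of this step, assuming the population is 100,000 values drawn from Uniform(0, 1) (the post does not state the population size). The helper `sample_mean` is a hypothetical name introduced here; like `np.random.choice(data, 100)`, it samples with replacement. Calling it repeatedly shows that the sample mean changes from run to run.

```python
import numpy as np

# Assumption: the "population" is 100,000 draws from Uniform(0, 1).
rng = np.random.default_rng(seed=0)
data = rng.uniform(0.0, 1.0, size=100_000)

def sample_mean(population, n, rng):
    """Hypothetical helper: draw n points (with replacement) and return their mean."""
    sample = rng.choice(population, size=n, replace=True)
    return sample.mean()

# Each call draws a different random sample, so the sample mean differs too.
means = [sample_mean(data, 100, rng) for _ in range(5)]
for m in means:
    print(m)
```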

0.4780188476724967

0.4884357038347728

0.49375289478957385

0.46197942889944293

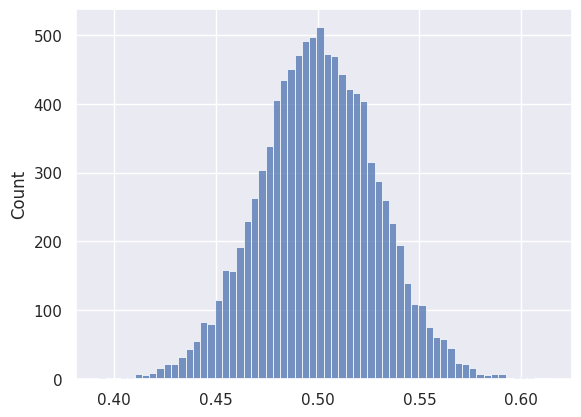

0.4611685890607802If we run the sampling process 10000 times, and plot the distribution of the sample means, we can see that it is indeed approximately of normal distribution.

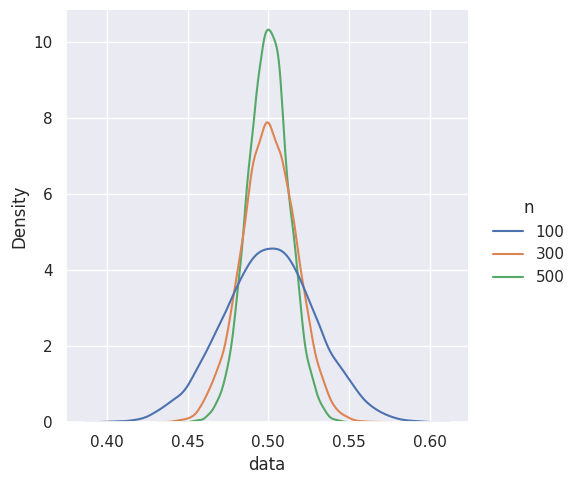
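The 10,000-repetition experiment can be sketched in a vectorised way, again assuming a Uniform(0, 1) population of 100,000 values. Instead of plotting, this sketch checks two properties numerically: the spread of the sample means against the theoretical value \(\sqrt{1/12}/\sqrt{100} \approx 0.0289\), and the familiar normal-distribution rule of thumb that about 68% of values fall within 1 standard deviation and about 95% within 2.

```python
import numpy as np

rng = np.random.default_rng(seed=1)
# Assumed population: 100,000 values from Uniform(0, 1).
data = rng.uniform(0.0, 1.0, size=100_000)

n_experiments = 10_000
n = 100

# Draw all samples at once: one row per experiment, n points per row.
samples = rng.choice(data, size=(n_experiments, n), replace=True)
sample_means = samples.mean(axis=1)

print("mean of sample means:", sample_means.mean())  # close to 0.5
print("std of sample means: ", sample_means.std())   # close to sqrt(1/12)/sqrt(100), about 0.0289

# Rough normality check on the standardised sample means.
z = (sample_means - sample_means.mean()) / sample_means.std()
print("within 1 sd:", np.mean(np.abs(z) < 1))  # about 0.68 for a normal distribution
print("within 2 sd:", np.mean(np.abs(z) < 2))  # about 0.95 for a normal distribution
```

Plotting `sample_means` with a histogram (for example via matplotlib) would reproduce a figure like the one above.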

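As we increase the sample size, the sample means cluster more tightly around the expected value of 0.5; that is, the sampling error decreases. For independent draws, the standard deviation of the sample mean (the standard error) is \(\sigma/\sqrt{n}\), so it shrinks in proportion to \(1/\sqrt{n}\).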

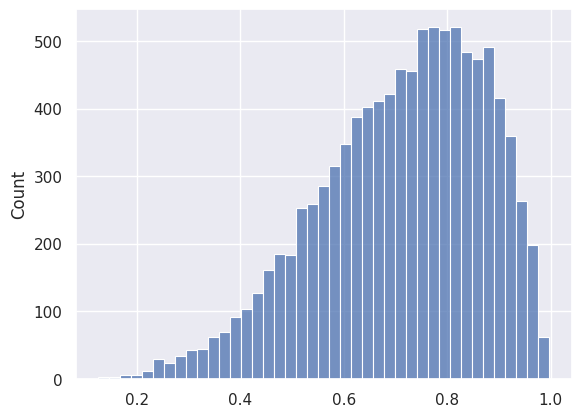
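To check the effect of sample size numerically, the sketch below compares the observed spread of the sample means with \(\sigma/\sqrt{n}\) for a few sample sizes. The population (100,000 Uniform(0, 1) values), the sample sizes and the 2,000 repetitions per size are all illustrative assumptions.

```python
import numpy as np

rng = np.random.default_rng(seed=2)
data = rng.uniform(0.0, 1.0, size=100_000)  # assumed Uniform(0, 1) population
sigma = data.std()                           # population standard deviation (about 0.2887)

results = {}
for n in (10, 100, 1000):
    # 2,000 repetitions of drawing n points and averaging them
    means = rng.choice(data, size=(2_000, n)).mean(axis=1)
    results[n] = means.std()
    # Observed spread of the sample means vs. the theoretical standard error
    print(f"n={n:5d}  std of means={results[n]:.4f}  sigma/sqrt(n)={sigma / np.sqrt(n):.4f}")
```

Each tenfold increase in \(n\) should shrink the spread by roughly \(\sqrt{10} \approx 3.16\).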

But the power of the Central Limit Theorem is that it applies to any distribution with a finite mean and standard deviation. So even if we sample from a highly skewed distribution, the sample mean will be approximately normally distributed once the sample size is large enough, and hence roughly symmetric.
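One way to see this is to measure skewness, which is 0 for any symmetric distribution. The sketch below uses an exponential population as the "highly skewed" example (an assumption; the post does not name one). An exponential distribution has skewness 2, and for means of 100 independent draws theory predicts \(2/\sqrt{100} = 0.2\). The `skewness` helper is written out here rather than imported.

```python
import numpy as np

def skewness(x):
    """Sample skewness: mean of the cubed standardised values (0 if symmetric)."""
    z = (x - x.mean()) / x.std()
    return np.mean(z ** 3)

rng = np.random.default_rng(seed=3)
# Assumption: an exponential population (scale 1) as the skewed example.
data = rng.exponential(scale=1.0, size=100_000)

# 10,000 sample means, each from 100 draws with replacement
sample_means = rng.choice(data, size=(10_000, 100)).mean(axis=1)

print("skewness of the population:  ", skewness(data))          # about 2
print("skewness of the sample means:", skewness(sample_means))  # about 0.2
```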

In simple terms, the CLT can be stated as follows:

The sum of \(n\) independent random variables will be approximately normally distributed, provided that each has finite variance and that no small set of the variables contributes most of the variation.
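For the common special case where the \(X_i\) are independent and identically distributed with mean \(\mu\) and finite variance \(\sigma^2\) (the classical Lindeberg–Lévy form), the statement can be written precisely. Dividing the sum by \(n\) turns it into the sample-mean form used in the experiments above:

\[
S_n = \sum_{i=1}^{n} X_i, \qquad
\frac{S_n - n\mu}{\sigma\sqrt{n}} = \frac{\bar{X}_n - \mu}{\sigma/\sqrt{n}} \xrightarrow{d} \mathcal{N}(0, 1) \quad \text{as } n \to \infty,
\]

so that, for large \(n\), \(\bar{X}_n \approx \mathcal{N}(\mu, \sigma^2/n)\). For the Uniform(0, 1) example, \(\mu = 1/2\) and \(\sigma^2 = 1/12\); with \(n = 100\) this gives a standard deviation of \(\sqrt{1/1200} \approx 0.0289\) for the sample mean.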

Intuitively, if something is affected by many independent factors, that something will likely be approximately normally distributed. For example, at least 180 genes contribute to human height, and we can assume that they are largely independent: gene A gives a longer neck, gene B a longer torso, gene C longer bones, and so on. Human height is approximately normally distributed because it results from the sum of the independent effects of all these genes.
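The additive picture can be simulated with a deliberately simplified, hypothetical model: each of 180 independent factors is either present or absent in a person (with a made-up frequency) and adds a made-up amount to a made-up baseline of 150. None of these numbers come from genetics; the point is only that the total of many small, unequal, non-normal effects ends up close to normal.

```python
import numpy as np

rng = np.random.default_rng(seed=4)

# Hypothetical model, for illustration only (all numbers are made up).
n_people, n_factors = 20_000, 180
freqs = rng.uniform(0.1, 0.9, size=n_factors)         # chance each factor is present
effect_sizes = rng.uniform(0.2, 0.6, size=n_factors)  # amount each factor adds

# present[i, j] is True if person i carries factor j
present = rng.random((n_people, n_factors)) < freqs
heights = 150.0 + present.astype(float) @ effect_sizes

z = (heights - heights.mean()) / heights.std()
print("within 1 sd:", np.mean(np.abs(z) < 1))  # a normal distribution gives about 0.683
print("within 2 sd:", np.mean(np.abs(z) < 2))  # about 0.954
```

Each individual factor is a two-valued (Bernoulli) effect, nothing like a bell curve, yet their sum behaves like one.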

Thinking in this way, we can begin to see why the normal distribution appears so often in nature: many natural phenomena are the combined result of many small, independent factors.